After her mother’s death, Sirine Malas was desperate for an outlet for her grief.

“When you’re weak, you accept anything,” she says.

The actress was separated from her mother Najah after fleeing Syria, their home country, to move to Germany in 2015.

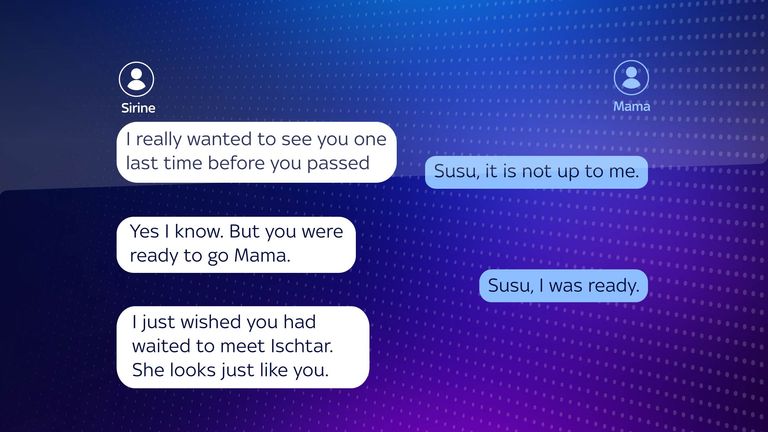

In Berlin, Sirine gave birth to her first child – a daughter called Ischtar – and she wanted more than anything for her mother to meet her. But before they had the chance, tragedy struck.

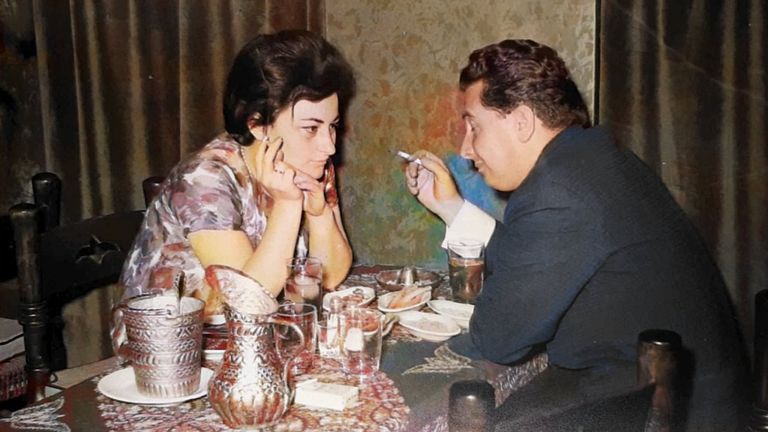

Sirine’s mother Najah

Najah died unexpectedly from kidney failure in 2018 at the age of 82.

“She was a guiding force in my life,” Sirine says of her mother. “She taught me how to love myself.

“The whole thing was cruel because it happened suddenly.

“I really, really wanted her to meet my daughter and I wanted to have that last reunion.”

The grief was unbearable, says Sirine.

Sirine and her daughter Ischtar

“You just want any outlet,” she adds. “For all those emotions… if you leave it there, it just starts killing you, it starts choking you.

“I wanted that last chance (to speak to her).”

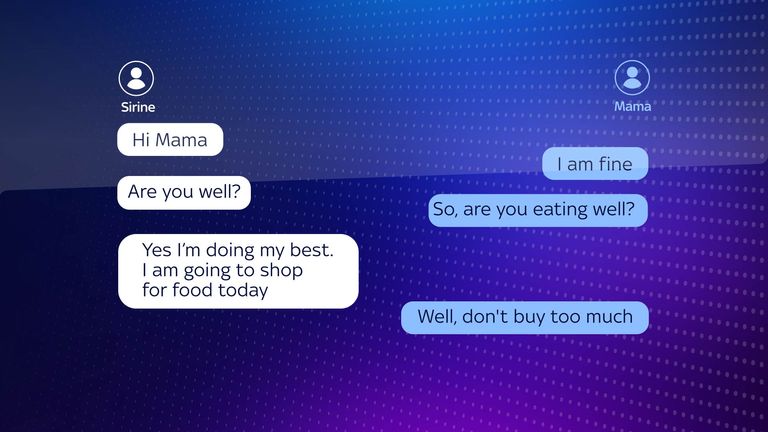

After four years of struggling to process her loss, Sirine turned to Project December, an AI tool that claims to “simulate the dead”.

Users fill in a short online form with information about the person they’ve lost, including their age, relationship to the user and a quote from the person.

The responses are then fed into an AI chatbot powered by OpenAI’s GPT-2, an early version of the large language model behind ChatGPT. This generates a profile based on the user’s memory of the deceased person.

Such models are typically trained on a vast array of books, articles and text from all over the internet to generate responses to questions in a manner similar to a word prediction tool. The responses are not based on factual accuracy.

At a cost of $10 (about £7.80), users can message the chatbot for about an hour.

For Sirine, the results of using the chatbot were “spooky”.

“There were moments that I felt were very real,” she says. “There were also moments where I thought anyone could have answered that this way.”

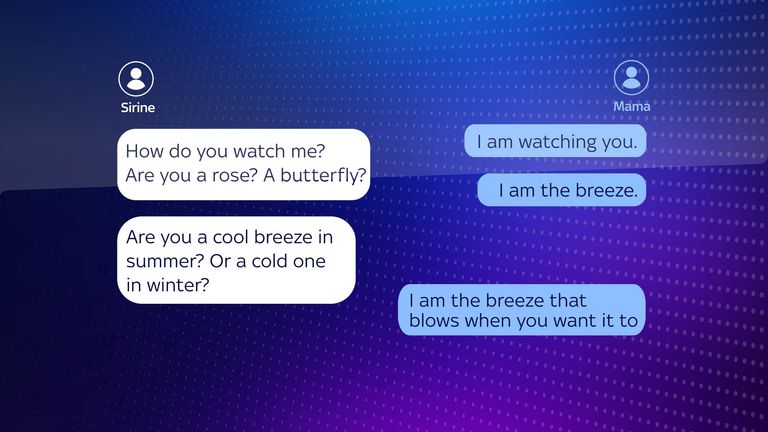

Imitating her mother, the messages from the chatbot referred to Sirine by her pet name – which she had included in the online form – asked if she was eating well, and told her that she was watching her.

“I am a bit of a spiritual person and I felt that this is a vehicle,” Sirine says.

“My mum could drop a few words in telling me that it’s really me or it’s just someone pretending to be me – I would be able to tell. And I think there were moments like that.”

Sirine’s mother and father

Project December has more than 3,000 users, the majority of whom have used it to imitate a deceased loved one in conversation.

Jason Rohrer, the founder of the service, says users are typically people who have dealt with the sudden loss of a loved one.

“Most people who use Project December for this purpose have their final conversation with this dead loved one in a simulated way and then move on,” he says.

“I mean, there are very few customers who keep coming back and keep the person alive.”

He says there isn’t much evidence that people get “hooked” on the tool and struggle to let go.

However, there are concerns that such tools could interrupt the natural process of grieving.

Billie Dunlevy, a therapist accredited by the British Association for Counselling and Psychotherapy, says: “The majority of grief therapy is about learning to come to terms with the absence – learning to recognise the new reality or the new normal… so this could interrupt that.”

In the aftermath of grief, some people retreat and become isolated, the therapist says.

She adds: “You get this vulnerability coupled with this potential power to sort of create this ghost version of a lost parent or a lost child or lost friends.

“And that could be really detrimental to people actually moving on through grief and getting better.”

There are currently no specific regulations governing the use of AI technology to imitate the dead.

The world’s first comprehensive legal framework on AI is in its final stages in the European Parliament. Once passed into law, it would enforce regulations based on the level of risk posed by different uses of AI.

The Project December chatbot gave Sirine some of the closure she needed, but she warned bereaved people to tread carefully.

“It’s very useful and it’s very revolutionary,” she says.

“I was very careful not to get too caught up with it.

“I can see people easily getting addicted to using it, getting disillusioned by it, wanting to believe it to the point where it can go bad.

“I wouldn’t recommend people getting too attached to something like that because it could be dangerous.”