Social media algorithms are still “pushing out harmful content to literally millions of young people” six years after Molly Russell’s death, the schoolgirl’s father has said.

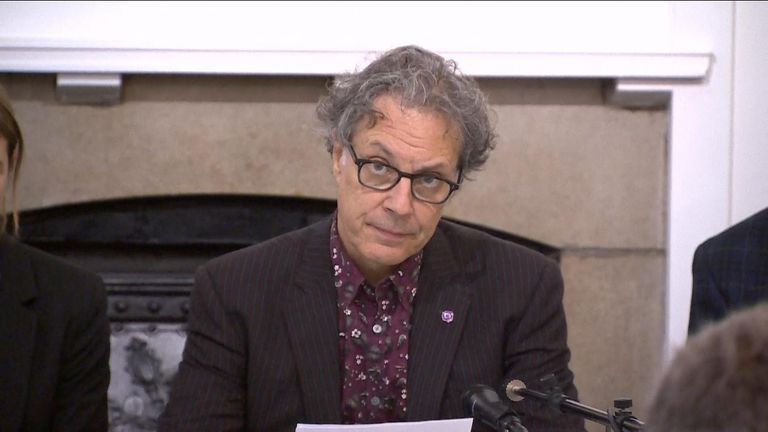

Ian Russell said a new report by the suicide prevention charity set up in his daughter’s honour shows “a fundamental systemic failure” by tech giants “that will continue to cost young lives”.

Molly, who took her own life, aged 14, in November 2017 after viewing posts related to suicide, depression and anxiety online, would have been celebrating her 21st birthday this week.

Last September, a coroner ruled the schoolgirl, from Harrow, northwest London, had been “suffering from depression and the negative effects of online content”.

The Molly Rose Foundation said its new research shows the “shocking scale and prevalence” of harmful content on Instagram, TikTok and Pinterest, six years on from her death.

On TikTok, some of the most viewed posts that reference suicide, self-harm and highly depressive content have been viewed and liked more than a million times, according to the charity.

The report said young people are routinely recommended large volumes of harmful content fed by high-risk algorithms.

While concerns around hashtags were mainly focused on Instagram and TikTok, fears over algorithmic recommendations also applied to Pinterest, it said.

Ian Russell has called for action to stop harmful content. File Pic

Mr Russell, who is chair of trustees at the Molly Rose Foundation, said: “This week, when we should be celebrating Molly’s 21st birthday, it’s saddening to see the horrifying scale of online harm and how little has changed on social media platforms since Molly’s death.

“The longer tech companies fail to address the preventable harm they cause, the more inexcusable it becomes.

“Six years after Molly died, this must now be seen as a fundamental systemic failure that will continue to cost young lives.

“Just as Molly was overwhelmed by the volume of the dangerous content that bombarded her, we’ve found evidence of algorithms pushing out harmful content to literally millions of young people.

“This must stop. It is increasingly hard to see the actions of tech companies as anything other than a conscious commercial decision to allow harmful content to achieve astronomical reach, while overlooking the misery that is monetised with harmful posts being saved and potentially ‘binge watched’ in their tens of thousands.”

The charity’s report has been created with The Bright Initiative, analysing data from 1,181 of the most engaged-with posts on Instagram and TikTok that used well-known hashtags around suicide, self-harm and depression.

The foundation said it is concerned that the design and operation of social media platforms are increasing the risk for some youngsters because of the ease with which they can find potentially harmful content through hashtag searches or recommendations.

It also suggested commercial pressures may be increasing the risks as sites compete to grab the attention of young people and keep them scrolling through their feed.

Mr Russell said the findings highlighted the importance of the new Online Safety Act and called for the new online safety regulator Ofcom to be “bold” in how it holds social media firms to account under the new laws.

The Act tasks tech companies with protecting children from damaging content and Ofcom will publish rules in the next few months around the promotion of material related to suicide and self-harm, with each new code requiring parliamentary approval before it is put in place.

A Meta spokesperson said: “We want teens to have safe, age-appropriate experiences on Instagram, and have worked closely with experts to develop our approach to suicide and self-harm content, which aims to strike the important balance between preventing people seeing sensitive content while giving people space to talk about their own experiences and find support.”

They said more than 30 tools have been built, including a control to limit the type of content teenagers are recommended, and the company will announce further measures soon.

A TikTok spokesperson said: “Content that promotes self-harm or suicide is prohibited on TikTok and, as the report highlights, we strictly enforce these rules by removing 98% of suicide content before it is reported to us.

“We continually invest in ways to diversify recommendations, block harmful search terms, and provide access to the Samaritans for anyone who needs support.”

Anyone feeling emotionally distressed or suicidal can call Samaritans for help on 116 123 or email jo@samaritans.org in the UK. In the US, call the Samaritans branch in your area or 1 (800) 273-TALK.